Fastest image resizer

Recently I wanted to resize over 1000 pictures in high resolution from my camera with more than 4.000 pixels in width. Usually I use ACDSee software for the job. It has a lots of settings and the results are pretty good. However it's a commercial software and runs only on Windows.

For that reason I wrote a simple script in Python for batch resizing of images, it uses PILLOW imaging library and works good enough on my mac.

from PIL import Image image = Image.open(input) resized = image.resize(new_size, Image.ANTIALIAS) resized.save(output)

However waiting for 1000 pictures can be a pain when the average speed of a single resize operation is over 1 second. I started wondering if there is something faster and after some digging on Internet I decided to implement the fastest batch resizer on my own.

First I split the problem into 4 main parts:

Speed of reading/writing files from the disk depends on many variables, but mostly on operating system. For Windows it's best to use win32 API call:

Based on my tests it is at least 2x faster than fread. For OSX the fastest way is to use C-style fread as it showed to be approximately 4x faster than using c++ fstream. I tried to use fixed buffer and read the file in the loop but it didn't make any significant difference, reading the whole file at once is just fine.

First I split the problem into 4 main parts:

- Serialization and deserialization.

- Decoding and Encoding of JPEG image.

- Resizing.

- Comparison, is it really the fastest solution?

Serialization/deserialization

Speed of reading/writing files from the disk depends on many variables, but mostly on operating system. For Windows it's best to use win32 API call:

DWORD dwBytesRead = 0; HANDLE hFile = CreateFile(fileName.c_str(), GENERIC_READ, FILE_SHARE_READ, NULL, OPEN_EXISTING, FILE_ATTRIBUTE_NORMAL, NULL); unsigned long size = GetFileSize(hFile, NULL); data.resize(size); ReadFile(hFile, (char*)(&(data)[0]), size, &dwBytesRead, NULL);

Based on my tests it is at least 2x faster than fread. For OSX the fastest way is to use C-style fread as it showed to be approximately 4x faster than using c++ fstream. I tried to use fixed buffer and read the file in the loop but it didn't make any significant difference, reading the whole file at once is just fine.

FILE* file = std::fopen(fileName.c_str(), "rb"); if (file) { fseek(file, 0, SEEK_END); long size = ftell(file); rewind(file); data.resize(size); fread((char*)(&(data)[0]), 1, size, file); fclose(file); }

Decoding and Encoding of JPEG image

Now we have the binary data but without decoding to RGB it's kind of useless. Standard library for decoding JPEG images is libjpeg, it is used by majority of commercial and open source software solutions for image processing. Fortunately there exists faster version libjpeg-turbo, heavily optimized for SIMD instruction set (MMX, SSE2, NEON). It should give us decoding speed up to 400% of standard libjpeg library.

I used TURBO-jpeg framework with simple interface for encoding images, example of decoding an image:

bool JPEGImage::decode() { int jpegSubsamp, ret; tjhandle jpegDecompressor = tjInitDecompress(); ret = tjDecompressHeader2(jpegDecompressor, m_data->data(), m_data->size(), &m_width, &m_height, &jpegSubsamp); if (!ret) { unsigned long size = static_cast < unsigned long> (m_width *m_height * 3); m_buffer->resize(size); ret = tjDecompress2(jpegDecompressor, m_data->data(), m_data->size(), m_buffer->data(), m_width, 0, m_height, 3, TJFLAG_FASTDCT | TJFLAG_NOREALLOC); tjDestroy(jpegDecompressor); } return ret == 0; }

Resizing

Now comes the main part. Resizing is nothing else than re-sampling of the image pixels. There are several methods but all of them have the same concept, value of every pixel must be read, weighted by neighboring pixels and written to output image.

Naive solution would be to go through every pixel one by one, read the color value, make some averaging with neighboring pixels and write it to output image. But we won't do that because this problem is perfect for highly parallel algorithm. And I am not talking about CPU parallelism, in time when my graphic card has more than 500 processing units GPU calculations seem like an ultimate solution to our problem.

There has been a wide research of general purpose processing on GPU in past years. Today there exist three major frameworks, OpenCL, CUDA and DirectCompute. CUDA is the oldest one, released in 2007 by NVIDIA and still actively developed. However the biggest disadvantage is that it runs only on NVIDIA graphic cards. DirectCompute was made by Microsoft as a part of DirectX11 and has its own limitations, it runs only on Windows 7 and higher on DirectX 10+ powered apps. Lastly OpenCL, developed by Khronos Group, can execute programs across different platforms including CPUs, GPUs, signal processors and other units. The API is highly mature and has the same or even better power than CUDA, perfect for this application.

It takes a while to get in OpenCL but after you get over the hard part, it will reveal you possibilities you could only dream about. Hundreds of processors with thousands of running threads can give you a fantastic performance boost.

Unlike the CPU the OpenCL is suitable for applications consisting of lots of small tasks that can run in parallel. For us the small task is a single operation over one pixel. In case of an image with resolution 4.000 x 4.000 pixels there are together 16 million tasks. OpenCL will do all the heavy work for us, we just need to write a program for one pixel, in OpenCL terminology this program is called kernel. Graphic programmers will find lots of similarities with fragment shaders.

__kernel void resize_nn(__read_only image2d_t source, __write_only image2d_t dest, struct SImage src_img, struct SImage dst_img, float ratioX, float ratioY) { const int gx = get_global_id(0); const int gy = get_global_id(1); const int2 pos = { gx, gy }; if (pos.x >= dst_img.Width || pos.y >= dst_img.Height) return; float2 srcpos = {(pos.x + 0.4995f) / ratioX, (pos.y + 0.4995f) / ratioY}; int2 SrcSize = (int2)(src_img.Width, src_img.Height); float4 value; int2 ipos = convert_int2(srcpos); if (ipos.x< 0 || ipos.x >= SrcSize.x || ipos.y< 0 || ipos.y >= SrcSize.y) value = 0; else value = read_imagef(source, sampler, ipos); write_imagef(dest, pos, value); }

This small program is an example of OpenCL kernel responsible for resizing image data, it is a very simple algorithm (nearest neighbor) and is shown here just for reference. In the real application I am using more complicated algorithm for best image quality. The kernel program is compiled and uploaded to GPU together with image data and when the calculation is finished the image data are downloaded back to system memory. This transfer can cost some time and it depends on the image size and speed of your memory bandwidth.

Comparison

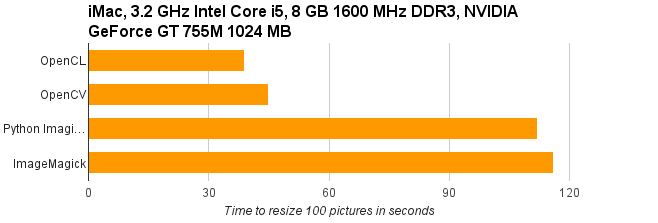

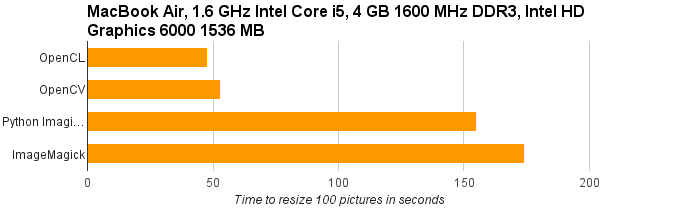

I have created series of tests comparing time of various batch resizers on Windows 7, iMac and MacBook Air. Machine with Windows 7 has ATI Radeon graphic card, iMac has GeForce from NVIDIA and lastly MacBook Air comes with an integrated video card Intel HD. I tried to find the fastest image processor available for a good comparison and I picked OpenCV framework which is powered by CUDA and should run extremely well on NVIDIA graphic cards. It has also some sort of OpenCL support but based on latest code, it is quite limited.

Tested resizers:

- OpenCL batch resizer

- Python imaging

- Imagemagick

- OpenCV (Python wrapper)

- Adobe Photoshop CS6 (win7)

- ACDSee 6.0 (win7)

I have prepared 100 high resolution images in JPEG format. During the tests all images were resized to 50% of the original size with cubic interpolation algorithm. Every test is run multiple-times and averaged for the most accurate results.

In the first test on Win7 machine the OpenCL resizer is the clear winner of the test. Second OpenCV is much slower because it has been optimised for NVIDIA graphic cards, but this might change in the future versions of OpenCV. Other resizers are far behind because of using CPU only.

Second test was run on iMac with NVIDIA graphic card. OpenCL is still a little bit faster than OpenCV, probably because of the optimised JPEG decoding library. Both resizers are using different frameworks, it's CUDA vs OpenCL and it's clear they are equally powerful.

Third test was run on MacBook Air with an integrated graphic card Intel HD. Results are similar to iMac test. Here is is interesting that OpenCV resizer is probably using OpenCL under the hood because CUDA is not possible here.

Conclusion

The OpenCL resizer is the fastest one with noticeable difference on Windows platform. However on mac it is performing almost the same as the OpenCV framework. We can expect improvements of OpenCV in the future versions.

References

- "OpenCL Integrated Performance Primitives - A library of optimized OpenCL image processing functions", https://github.com/CRVI/OpenCLIPP

- "The big blob", http://www.thebigblob.com/category/opencl/

- "Dr. Dobb's", http://www.drdobbs.com/parallel/a-gentle-introduction-to-opencl/231002854